Terraform is an open-source Infrastructure as Code (IaC) tool created by HashiCorp. It is used for building, changing, and versioning infrastructure safely and efficiently in the cloud. An Infrastructure as Code tool lets developers codify infrastructure so that provisioning is automated, faster, and repeatable.

Amazon S3 is an object storage service that you can use to store and retrieve any amount of data, at any time, from anywhere on the web.

In this tutorial, we learn how to upload files from a laptop or PC to an AWS S3 bucket using Terraform.

Requirements

- An AWS account and an Identity and Access Management (IAM) user with an access key and secret key pair.

- Terraform installed on your system.
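
The configuration in this tutorial uses the aws_s3_bucket_object resource and an inline acl argument on the bucket, both of which are deprecated in version 4 and later of the AWS provider. One option is to pin the provider to a 3.x release so that the code runs as written. The following is a minimal sketch of that; the file name versions.tf and the exact version constraint are assumptions, not part of the original tutorial.

```hcl
# versions.tf (hypothetical file name; any .tf file in the working directory works)
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
      # Assumption: a 3.x release, where aws_s3_bucket_object and the
      # inline acl argument on aws_s3_bucket are not yet deprecated.
      version = "~> 3.0"
    }
  }
}
```

The constraint "~> 3.0" allows any 3.x release but not 4.0 or later.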

Step 1: Provide access key

Create a file named provider.tf and paste in the following code. The access key and secret key are generated when you add a user in IAM. Make sure that the user has at least the AmazonS3FullAccess policy attached. Set the region to the one you are going to work in.

provider "aws" {
  access_key = "ACCESS_KEY_HERE"
  secret_key = "SECRET_KEY_HERE"
  region     = "us-east-1"
}

Step 2: Create a bucket

Open another file in the same directory named 's3bucket.tf' and create our first bucket 'b1', naming it 's3-terraform-bucket-lab'. You will get an error if the bucket name is not globally unique, because S3 bucket names share a single namespace across all AWS accounts. Another important setting is the ACL (access control list), which controls who can access the bucket; set it to either private or public-read. You can add tags of your choice.

Also, upload the file, which is located in the 'myfiles' directory. Define the resource as aws_s3_bucket_object. To refer to the bucket you just defined above, use its id through aws_s3_bucket.b1.id. The key is the name given to the object in the bucket, and you can choose it freely. The etag is set to the file's MD5 sum so that Terraform can detect when the file has changed since its last upload.
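
On AWS provider 4.0 and later, aws_s3_bucket_object is deprecated in favor of aws_s3_object, which accepts the arguments used in this step. As a minimal sketch, assuming such a provider version and the bucket b1 defined in this step, the upload could be written as follows. It would replace the aws_s3_bucket_object block rather than sit alongside it, since both would manage the same key. The sketch leaves out the optional acl argument.

```hcl
# Sketch for AWS provider >= 4.0 (assumption); use instead of aws_s3_bucket_object
resource "aws_s3_object" "object" {
  bucket = aws_s3_bucket.b1.id               # the bucket defined in s3bucket.tf
  key    = "profile"                         # object name inside the bucket
  source = "myfiles/yourfile.txt"            # local file to upload
  etag   = filemd5("myfiles/yourfile.txt")   # changes when the file content changes
}
```

The listing that follows keeps the tutorial's original resource type.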

# Create a bucket
resource "aws_s3_bucket" "b1" {
  bucket = "s3-terraform-bucket-lab"
  acl    = "private" # or can be "public-read"

  tags = {
    Name        = "My bucket"
    Environment = "Dev"
  }
}

# Upload an object
resource "aws_s3_bucket_object" "object" {
  bucket = aws_s3_bucket.b1.id
  key    = "profile"
  acl    = "private" # or can be "public-read"
  source = "myfiles/yourfile.txt"
  etag   = filemd5("myfiles/yourfile.txt")
}

Step 2.1: To upload multiple files (optional)

If you want to upload all the files in a directory, use a 'for_each' loop over the set of file names returned by fileset.
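
To preview which files the loop will pick up, a small output can print the result of fileset before anything is uploaded. This is only a sketch, and the output name files_to_upload is hypothetical. With the pattern "*", fileset returns only the files directly inside myfiles/; a pattern such as "**" should also match files in subdirectories.

```hcl
# Hypothetical output: shows the set of file names that for_each iterates over
output "files_to_upload" {
  value = fileset("myfiles/", "*")
}
```

Each name in this set becomes one each.value in the resource block, and therefore one object key in the bucket.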

resource "aws_s3_bucket_object" "object1" {
  for_each = fileset("myfiles/", "*")
  bucket   = aws_s3_bucket.b1.id
  key      = each.value
  source   = "myfiles/${each.value}"
  etag     = filemd5("myfiles/${each.value}")
}

Step 3: Execute

Run terraform init once in the directory first so that Terraform downloads the AWS provider. The terraform plan command then creates an execution plan: Terraform performs a refresh, unless explicitly disabled, and determines what actions are necessary to achieve the desired state specified in the configuration files.

Finally, run terraform apply to make the changes and see the output.

terraform init
terraform plan
terraform apply

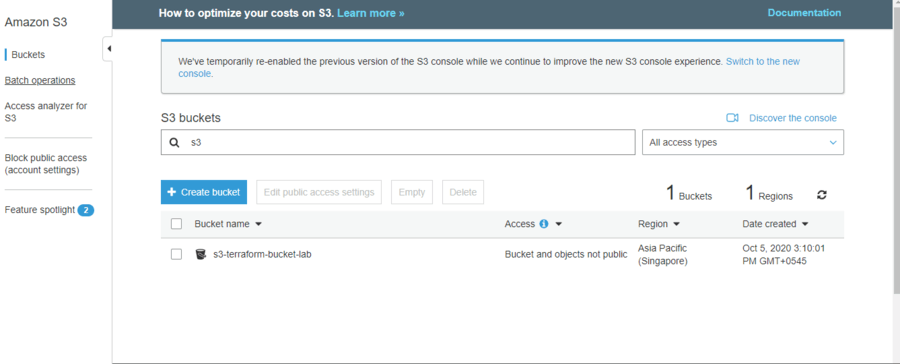

Log in to your AWS console and go to the S3 service. There you can see the bucket s3-terraform-bucket-lab and the file you uploaded inside it.
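
The same check can be made from the terminal by declaring outputs, which Terraform prints at the end of terraform apply. The following is a minimal sketch, assuming the resource names b1 and object from the original Step 2 listing; the output names are hypothetical.

```hcl
# Hypothetical outputs for confirming what was created
output "bucket_name" {
  value = aws_s3_bucket.b1.bucket # the bucket name as created in S3
}

output "uploaded_key" {
  value = aws_s3_bucket_object.object.key # the key of the single uploaded file
}
```

Running terraform output afterwards prints the same values again without changing anything.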

Conclusion

We have reached the end of this article. In this guide, we have walked you through the steps required to create a bucket in AWS S3 and add single or multiple files to it using Terraform.
